I’ve tested two options for saving and loading the weights: the first stores them in a MySQL database, the second saves them to a CSV file.

To follow this part, you need my DataBase library, which I wrote for working with neural networks: it lets you connect to a database quickly and work with it. The library is available on GitHub: https://github.com/scisoftdev/Python/blob/master/Database/Database.py.

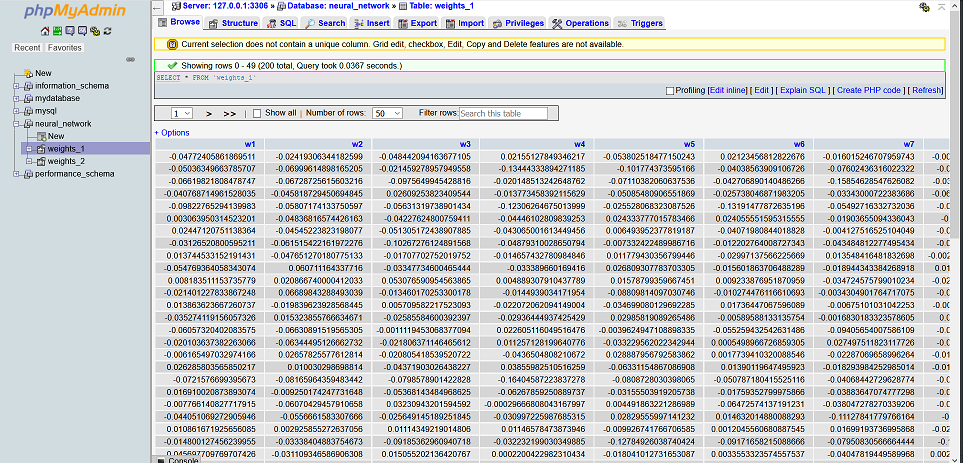

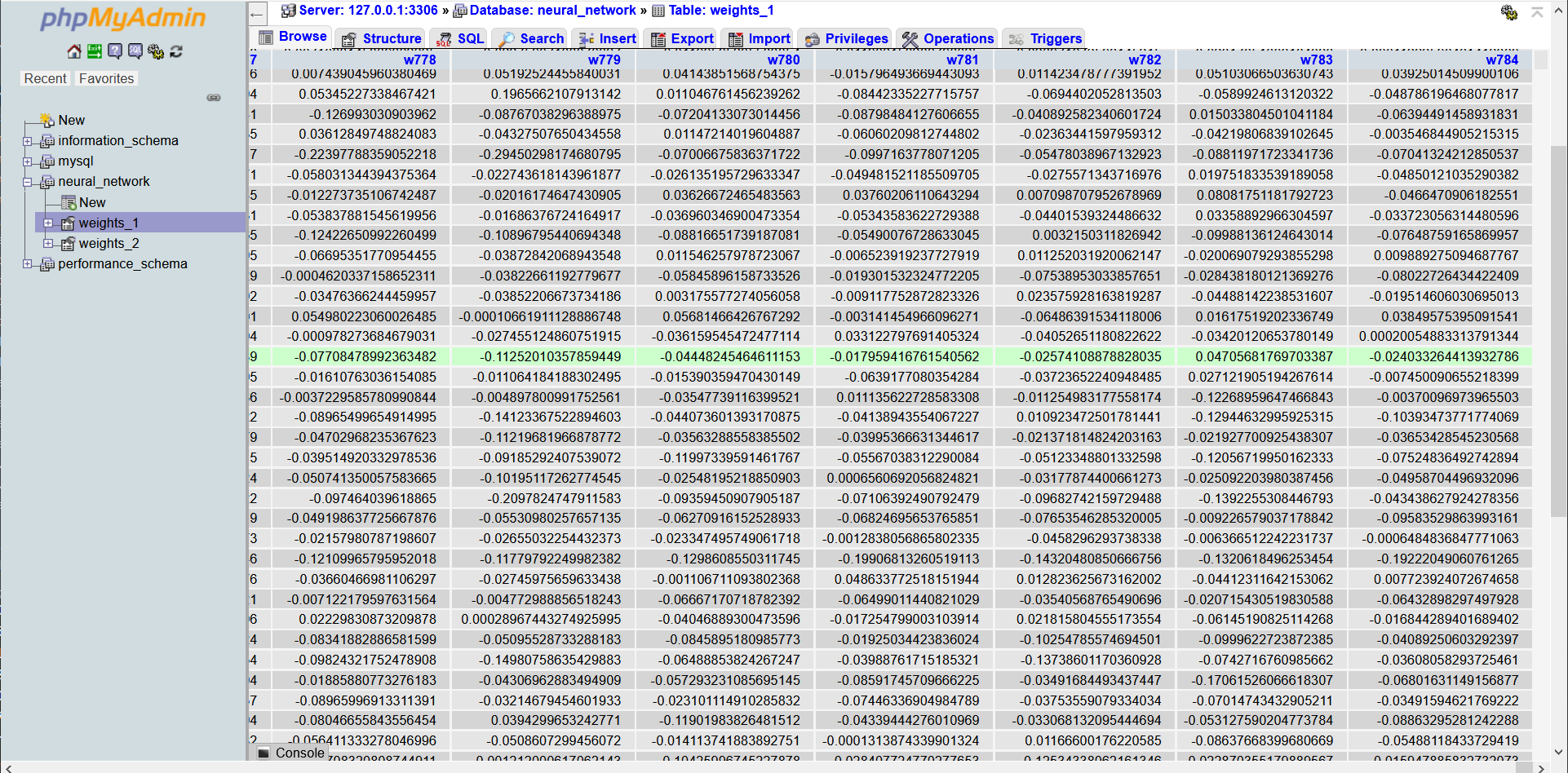

Before saving the weights, you need to create a database and prepare the tables that will hold them. The DataBase library can create the database itself if it does not already exist on the server; you only need to pass the connection data and the database name. Tables are a little more complicated, because each table stores only one set of weights, the one between two adjacent layers. So a network with four layers needs three weight tables. I wrote a simple script for this task.

```python
# License GPLv2
from DataBase import DataBase

host = 'localhost'
user = 'root'
password = ''
database = 'neural_network'
table = 'weights_1'

base = DataBase(host, user, password, database)

# neural network parameters
parameters = [784, 200, 10]

name_w = []  # table names
for i in range(1, len(parameters)):
    name_w.append('weights_' + str(i))
print(name_w)

weights_n = []
for i in range(len(parameters)):
    weights = []
    for j in range(parameters[i]):
        if j < parameters[i] - 1:
            n = 'w' + str(j + 1) + ' DOUBLE,'
        else:
            n = 'w' + str(j + 1) + ' DOUBLE'
        weights.append(n)
    weights_n.append(weights)

for i in range(len(name_w)):
    w = " ".join(weights_n[i])
    base.createTable_weights(name_w[i], w)
```
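The table layout that these loops produce can be summarised in a few lines. This is only a sketch, and the helper name `weight_tables` is hypothetical (it is not part of the DataBase library): for each pair of adjacent layers it returns the table name, the number of columns (the size of the earlier layer) and the number of rows (the size of the later layer).

```python
def weight_tables(parameters):
    """Return (table_name, n_columns, n_rows) for each pair of adjacent layers.

    Hypothetical helper: layer i - 1 feeds layer i, so the weight matrix between
    them has parameters[i] rows and parameters[i - 1] columns (like wih, whose
    shape is (hnodes, inodes)).
    """
    tables = []
    for i in range(1, len(parameters)):
        tables.append(("weights_" + str(i), parameters[i - 1], parameters[i]))
    return tables


for name, n_columns, n_rows in weight_tables([784, 200, 10]):
    print(name, n_columns, "columns,", n_rows, "rows")
```

With four layers, for example `[784, 200, 100, 10]`, it returns three tables, matching the rule that n layers need n - 1 weight tables.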

After connecting to the database server, the program reads the list of network parameters and, based on it, adds one table per set of weights to the database.

How does it work? Suppose the network has the parameters [784, 200, 10]. Then two tables are created, with columns named wn, where n is the column number. The first table has 784 columns and will hold 200 rows; the second has 200 columns and will hold 10 rows. Every column has the type DOUBLE. The program shows how the SQL query that creates a table is assembled:

```sql
CREATE TABLE weights_i(w1 DOUBLE, w2 DOUBLE, ..., wn DOUBLE)
```
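To check exactly what text the generated query contains, here is a hedged sketch that assembles the same statement in one function. The name `create_table_sql` is hypothetical; it mirrors the string concatenation in the createTable_weights function of the DataBase library and the column list built by the loop in the script.

```python
def create_table_sql(table_name, n_columns):
    """Build the CREATE TABLE statement for one weight table (hypothetical helper).

    Joining 'w1 DOUBLE', 'w2 DOUBLE', ... with ', ' gives the same column text
    as the script, whose items end with ',' and are joined with a space.
    """
    columns = ", ".join("w" + str(j + 1) + " DOUBLE" for j in range(n_columns))
    return "CREATE TABLE " + table_name + "(" + columns + ")"


print(create_table_sql("weights_2", 3))
# CREATE TABLE weights_2(w1 DOUBLE, w2 DOUBLE, w3 DOUBLE)
```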

For this code to work correctly as part of the network, it has to be moved into the class implementation, with the initial layer sizes written into a list. I did not do this while testing, because the main goal was to save the weights correctly: at this stage I run the table-creation program separately and then store the weights of the neural network. Later I will add a function that saves the whole neural network, with all its parameters and any number of layers. For now, let us take a closer look at some useful functions from the DataBase library.
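One possible way to move the table definitions into the class is sketched below. The class `NetworkLayout` and its method `weight_table_definitions` are hypothetical stand-ins, not code from the article or the repository; they only show the layer sizes being stored in a list in `__init__` and turned into the column strings that the table-creation script builds.

```python
class NetworkLayout:
    """Hypothetical stand-in for the neuralNetwork class: it keeps only the layer sizes."""

    def __init__(self, inputnodes, hiddennodes, outputnodes):
        # the initial parameters written into a list
        self.parameters = [inputnodes, hiddennodes, outputnodes]

    def weight_table_definitions(self):
        """Map each table name to the column string for createTable_weights."""
        definitions = {}
        for i in range(1, len(self.parameters)):
            n_columns = self.parameters[i - 1]
            columns = ", ".join("w" + str(j + 1) + " DOUBLE" for j in range(n_columns))
            definitions["weights_" + str(i)] = columns
        return definitions


layout = NetworkLayout(784, 200, 10)
definitions = layout.weight_table_definitions()
print(list(definitions))  # ['weights_1', 'weights_2']
```

In the real neuralNetwork class, each name and column string could then be passed to `base.createTable_weights(name, columns)`.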

One of them is createTable_weights, which runs a dedicated SQL query to add a table:

```python
# for saving the neural network weights
def createTable_weights(self, table_name, columns):
    my_cursor = self.base.cursor()
    my_cursor.execute("CREATE TABLE " + table_name + '(' + columns + ')')
```

The name_w array stores the table names, which are then used in the createTable_weights function.
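If you want to try table creation without a MySQL server, the sketch below uses Python's built-in sqlite3 module as a stand-in; this substitution is my assumption, not part of the DataBase library. SQLite accepts the DOUBLE type name (it gives such columns REAL affinity), and the sketch uses CREATE TABLE IF NOT EXISTS so that a second run does not fail with a "table already exists" error.

```python
import sqlite3


def create_weight_tables(connection, parameters):
    """Create one table per pair of adjacent layers (sqlite3 stand-in for MySQL)."""
    cursor = connection.cursor()
    for i in range(1, len(parameters)):
        columns = ", ".join("w" + str(j + 1) + " DOUBLE" for j in range(parameters[i - 1]))
        # IF NOT EXISTS: re-running the script does not raise "table already exists"
        cursor.execute("CREATE TABLE IF NOT EXISTS weights_" + str(i) + "(" + columns + ")")
    connection.commit()


conn = sqlite3.connect(":memory:")
create_weight_tables(conn, [784, 200, 10])
create_weight_tables(conn, [784, 200, 10])  # second call changes nothing
names = [row[0] for row in conn.execute(
    "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
print(names)  # ['weights_1', 'weights_2']
```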

To write the obtained weights into the prepared tables, you need to use the following functions.

```python
table_list = base.showColumnInfo(database, 'weights_1')
column_info = base.getColumnInfo(table_list, 0)
base.insertInto('weights_1', w_1, column_info)
```

The showColumnInfo function returns a list with complete information about every column in the table. To build the SQL query that inserts data, you need to know which columns the table has. The getColumnInfo function then takes the item at index 0 of that information, which is the column name. The last function, insertInto, writes the data to the table. If you would like a more detailed description of the DataBase library, please ask in the comments.
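The three steps (read the column information, pick out the names, insert the rows) can be sketched with sqlite3 as well. The helpers `column_names` and `insert_rows` are hypothetical counterparts of showColumnInfo/getColumnInfo and insertInto, not their actual implementation. Note that SQLite's `PRAGMA table_info` puts the column name at index 1, whereas MySQL's `SHOW COLUMNS` puts it at index 0.

```python
import sqlite3


def column_names(connection, table):
    """Rough sqlite3 counterpart of showColumnInfo + getColumnInfo.

    PRAGMA table_info returns one tuple per column; the name is at index 1.
    """
    return [row[1] for row in connection.execute("PRAGMA table_info(" + table + ")")]


def insert_rows(connection, table, rows):
    """Rough counterpart of insertInto: one INSERT per weight-matrix row."""
    columns = column_names(connection, table)
    placeholders = ", ".join("?" for _ in columns)
    sql = ("INSERT INTO " + table + " (" + ", ".join(columns) + ") "
           "VALUES (" + placeholders + ")")
    connection.executemany(sql, rows)
    connection.commit()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE weights_2(w1 DOUBLE, w2 DOUBLE, w3 DOUBLE)")
insert_rows(conn, "weights_2", [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]])
print(column_names(conn, "weights_2"))  # ['w1', 'w2', 'w3']
print(conn.execute("SELECT COUNT(*) FROM weights_2").fetchone()[0])  # 2
```

With a MySQL driver such as mysql-connector-python or PyMySQL the placeholder is `%s` rather than `?`. Passing the values as parameters, instead of pasting them into the SQL text, also avoids quoting and formatting problems with floats.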

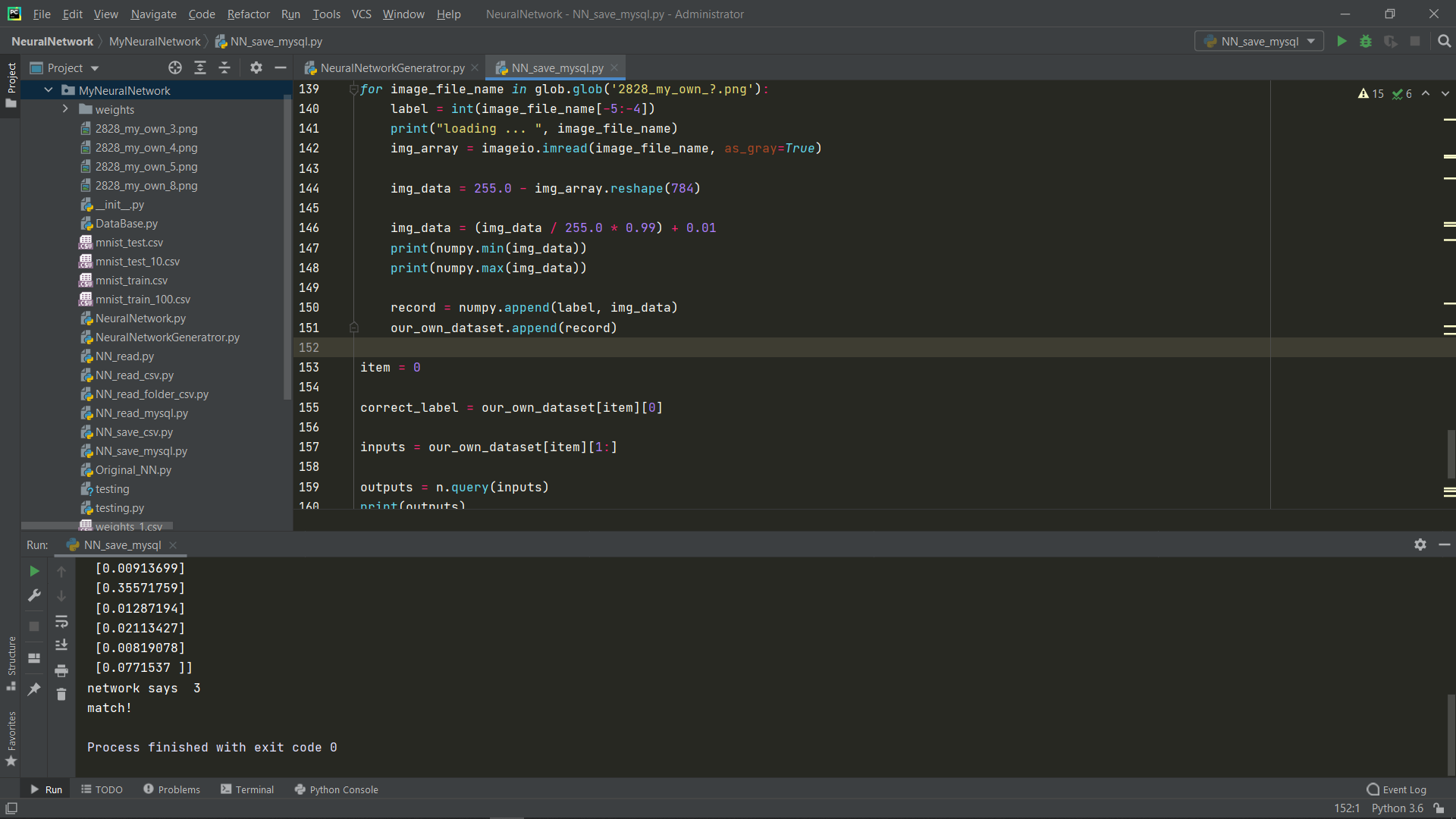

In the neural network program itself, the query function looks like this:

```python
def query(self, inputs_list):
    inputs = numpy.array(inputs_list, ndmin=2).T

    hidden_inputs = numpy.dot(self.wih, inputs)
    hidden_outputs = self.activation_function(hidden_inputs)

    final_inputs = numpy.dot(self.who, hidden_outputs)
    final_outputs = self.activation_function(final_inputs)

    w_1 = self.float_type(self.wih)
    w_2 = self.float_type(self.who)

    # connect here, just before writing: a connection opened earlier
    # times out before the weights are written to the database
    host = 'localhost'
    user = 'root'
    password = ''
    database = 'neural_network'
    base = DataBase(host, user, password, database)

    table_list = base.showColumnInfo(database, 'weights_1')
    column_info = base.getColumnInfo(table_list, 0)
    base.insertInto('weights_1', w_1, column_info)

    table_list = base.showColumnInfo(database, 'weights_2')
    column_info = base.getColumnInfo(table_list, 0)
    base.insertInto('weights_2', w_2, column_info)

    return final_outputs
```
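The article shows only the saving side. Below is a sketch of a save/load round trip, again with sqlite3 standing in for MySQL. It deliberately departs from the article's table layout in one assumed detail: an extra `row_id` column. SQL does not guarantee that `SELECT` returns rows in insertion order, and that order is exactly the order of the matrix rows, so the sketch stores it explicitly. It also clears the table before saving, since calling a saving routine repeatedly would otherwise presumably keep appending copies of the weights. The function names are hypothetical.

```python
import sqlite3


def save_matrix(connection, table, matrix):
    """Replace the contents of `table` with the rows of `matrix` (a list of lists)."""
    n_columns = len(matrix[0])
    columns = ", ".join("w" + str(j + 1) + " DOUBLE" for j in range(n_columns))
    # row_id is an assumption added here: it records each row's position explicitly
    connection.execute("CREATE TABLE IF NOT EXISTS " + table
                       + "(row_id INTEGER PRIMARY KEY, " + columns + ")")
    # remove old weights so that repeated saves do not pile up rows
    connection.execute("DELETE FROM " + table)
    placeholders = ", ".join("?" for _ in range(n_columns + 1))
    connection.executemany("INSERT INTO " + table + " VALUES (" + placeholders + ")",
                           [[i] + list(row) for i, row in enumerate(matrix)])
    connection.commit()


def load_matrix(connection, table):
    """Read the rows back in their original order and drop the row_id column."""
    rows = connection.execute("SELECT * FROM " + table + " ORDER BY row_id").fetchall()
    return [list(row[1:]) for row in rows]


conn = sqlite3.connect(":memory:")
who = [[0.5, -0.25], [0.125, 1.0], [-2.0, 0.75]]  # toy 3 x 2 weight matrix
save_matrix(conn, "weights_2", who)
save_matrix(conn, "weights_2", who)  # saving twice still leaves 3 rows
print(load_matrix(conn, "weights_2") == who)  # True
```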

The complete code of the neural network that saves its weights to the database is available on GitHub: https://github.com/scisoftdev/Python/blob/master/Save_weights_of_NN/NN_save_mysql.py.

It is also reproduced here:

```python
# License GPLv2
import numpy
import math
import glob
import imageio
from DataBase import DataBase


class neuralNetwork:

    def __init__(self, inputnodes, hiddennodes, outputnodes, learningrate):
        self.inodes = inputnodes
        self.hnodes = hiddennodes
        self.onodes = outputnodes

        self.wih = numpy.random.normal(0.0, pow(self.inodes, -0.5), (self.hnodes, self.inodes))
        self.who = numpy.random.normal(0.0, pow(self.hnodes, -0.5), (self.onodes, self.hnodes))

        self.lr = learningrate

        self.activation_function = lambda x: 1 / (1 + (math.e ** (-x)))

    def train(self, inputs_list, targets_list):
        inputs = numpy.array(inputs_list, ndmin=2).T
        targets = numpy.array(targets_list, ndmin=2).T

        hidden_inputs = numpy.dot(self.wih, inputs)
        hidden_outputs = self.activation_function(hidden_inputs)

        final_inputs = numpy.dot(self.who, hidden_outputs)
        final_outputs = self.activation_function(final_inputs)

        output_errors = targets - final_outputs
        hidden_errors = numpy.dot(self.who.T, output_errors)

        self.who += self.lr * numpy.dot((output_errors * final_outputs * (1.0 - final_outputs)),
                                        numpy.transpose(hidden_outputs))
        self.wih += self.lr * numpy.dot((hidden_errors * hidden_outputs * (1.0 - hidden_outputs)),
                                        numpy.transpose(inputs))

    # convert each row of a numpy float64 array into a list of Python floats
    def float_type(self, numpy_array):
        n = []
        for i in numpy_array:
            n.append(i.tolist())
        return n

    def query(self, inputs_list):
        inputs = numpy.array(inputs_list, ndmin=2).T

        hidden_inputs = numpy.dot(self.wih, inputs)
        hidden_outputs = self.activation_function(hidden_inputs)

        final_inputs = numpy.dot(self.who, hidden_outputs)
        final_outputs = self.activation_function(final_inputs)

        w_1 = self.float_type(self.wih)
        w_2 = self.float_type(self.who)

        # connect here, just before writing: a connection opened earlier
        # times out before the weights are written to the database
        host = 'localhost'
        user = 'root'
        password = ''
        database = 'neural_network'
        base = DataBase(host, user, password, database)

        # neural network parameters
        parameters = [784, 200, 10]

        name_w = []  # table names
        for i in range(1, len(parameters)):
            name_w.append('weights_' + str(i))
        print(name_w)

        weights_n = []
        for i in range(len(parameters)):
            weights = []
            for j in range(parameters[i]):
                if j < parameters[i] - 1:
                    weights.append('w' + str(j + 1) + ' DOUBLE,')
                else:
                    weights.append('w' + str(j + 1) + ' DOUBLE')
            weights_n.append(weights)

        # a plain CREATE TABLE raises an error in MySQL if the table already exists
        for i in range(len(name_w)):
            w = " ".join(weights_n[i])
            base.createTable_weights(name_w[i], w)

        table_list = base.showColumnInfo(database, 'weights_1')
        column_info = base.getColumnInfo(table_list, 0)
        base.insertInto('weights_1', w_1, column_info)

        table_list = base.showColumnInfo(database, 'weights_2')
        column_info = base.getColumnInfo(table_list, 0)
        base.insertInto('weights_2', w_2, column_info)

        return final_outputs


input_nodes = 784
hidden_nodes = 200
output_nodes = 10

learning_rate = 0.3

n = neuralNetwork(input_nodes, hidden_nodes, output_nodes, learning_rate)

with open("mnist_train.csv", 'r') as training_data_file:
    training_data_list = training_data_file.readlines()

epochs = 5

for e in range(epochs):
    for record in training_data_list:
        all_values = record.split(',')
        # numpy.asfarray was removed in NumPy 2.0; asarray(..., dtype=float) is equivalent
        inputs = (numpy.asarray(all_values[1:], dtype=float) / 255.0 * 0.99) + 0.01
        targets = numpy.zeros(output_nodes) + 0.01
        targets[int(all_values[0])] = 0.99
        n.train(inputs, targets)

our_own_dataset = []

for image_file_name in glob.glob('2828_my_own_?.png'):
    label = int(image_file_name[-5:-4])
    print("loading ... ", image_file_name)
    img_array = imageio.imread(image_file_name, as_gray=True)
    img_data = 255.0 - img_array.reshape(784)
    img_data = (img_data / 255.0 * 0.99) + 0.01
    print(numpy.min(img_data))
    print(numpy.max(img_data))
    record = numpy.append(label, img_data)
    our_own_dataset.append(record)

item = 0
correct_label = our_own_dataset[item][0]
inputs = our_own_dataset[item][1:]

outputs = n.query(inputs)
print(outputs)

label = numpy.argmax(outputs)
print("network says ", label)
if label == correct_label:
    print("match!")
else:
    print("no match!")
```

The float_type function converts the numpy float64 values into ordinary Python floats, which can be written into the DOUBLE columns of the database. Otherwise, an error occurs.
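Here is a quick check of what float_type changes (it needs only numpy). The standalone function below copies the method from the listing; the check also shows that calling `tolist()` on the whole array yields the same nested list, so the loop could be replaced by a single `numpy_array.tolist()` call.

```python
import numpy


def float_type(numpy_array):
    """Same logic as the method in the listing: one Python list per matrix row."""
    n = []
    for i in numpy_array:
        n.append(i.tolist())
    return n


weights = numpy.random.normal(0.0, 0.5, (3, 4))
rows = float_type(weights)

print(type(weights[0, 0]).__name__)  # float64
print(type(rows[0][0]).__name__)     # float
# ndarray.tolist() on the whole matrix gives the same nested list in one call
print(rows == weights.tolist())      # True
```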

Next time I will describe how to save the neural network weights to a CSV file. Saving weights to CSV files and reusing them later turns out to be more convenient than MySQL. I already have code for a neural network that works with a variable number of layers, saves and loads its weights, and also saves the network's metadata.

Fine! It works!